The 360° UPSC Debate: Should Artificial Intelligence Be Regulated?

Whether artificial intelligence (AI) should be regulated is a complicated question. Viewpoints differ: some advocate comprehensive regulation or control, while others argue that only partial regulation is necessary at present. Even those who agree on control differ on how much of it should be imposed.

Attention Please:

Dear Readers,

Preparation for the UPSC Examination requires studying multiple newspaper articles on a single topic, while at the same time we experience a paucity of time. Covering all the articles on our own is quite tedious. Thus, we are coming up with a new initiative called the Indian Express 360° UPSC Debate.

The 360° UPSC Debate: Should Artificial Intelligence Be Regulated? covers all the dimensions of the topic after sifting through multiple articles. This initiative and the topics covered will help students deepen their knowledge and improve their answer writing for the upcoming Civil Services Main Examination.

Why are we debating this issue in the first place?

Whether artificial intelligence (AI) should be regulated is a complicated question. Some advocate comprehensive regulation or control, while others argue that only partial regulation is necessary at present. Even those who agree on control differ on how much of it should be imposed. Here we discuss whether artificial intelligence should be regulated.

What sparked this debate?

The Center for AI Safety (CAIS) recently issued a brief statement aimed at sparking conversation about potential existential threats posed by artificial intelligence (AI). The one-sentence statement reads: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.” More than 350 AI CEOs, academics, and engineers signed the statement, including top leaders from three of the largest AI companies: Sam Altman, CEO of OpenAI; Demis Hassabis, CEO of Google DeepMind; and Dario Amodei, CEO of Anthropic. The announcement comes at a time of rising worry about the possible dangers of artificial intelligence.

Is Artificial Intelligence a Human-stein Monster?

“Artificial intelligence ‘hacked’ human civilization’s operating system”

According to Sam Altman, CEO of OpenAI, the artificial intelligence company that developed ChatGPT, government action will be vital in limiting the risks of increasingly powerful AI systems. He acknowledged that, as the technology advances, people are concerned about how it will affect the way we live. Altman recommended establishing a US or global organisation with the authority to license the most powerful AI systems, to take that licence away, and to ensure compliance with safety standards. When asked about his greatest worry regarding artificial intelligence, Altman said that the sector might inflict ‘significant harm to the world’ and that ‘if this technology goes wrong, it can go quite wrong’. He advocated that a new regulatory agency enforce protections to prevent AI models from ‘self-replicating and self-exfiltrating into the wild’, alluding to fears about future powerful AI systems that could lure humans into giving up control.

Yuval Noah Harari, the Israeli philosopher and author of Homo Deus and Sapiens: A Brief History of Humankind, recently argued in a leading publication that artificial intelligence has ‘hacked’ human civilization’s operating system. He notes that humanity has been plagued by AI dread since the dawn of the computer age. However, he believes that the AI technologies developed in recent years may endanger human civilization from an ‘unexpected direction’. He discussed how AI could affect culture, taking up the case of language, which is fundamental to human culture. His argument runs as follows. Language is the foundation of almost all human culture. Human rights, for example, are not part of our genetic code. They are, instead, cultural products that humans made by telling stories and creating laws. Gods are not physical beings. They are cultural artefacts that humans constructed by inventing myths and authoring scriptures. Harari also argued that democracy is a conversation that depends on meaningful discussion, and that when AI hacks language, it has the potential to undermine democracy.

Yuval Noah Harari went on to say that the rise of artificial intelligence is having a tremendous impact on society, influencing different facets of economics, politics, culture, and psychology. The most difficult task of the AI era, he said, is not the development of intelligent tools but striking a balance between humans and machines. Harari has discussed how AI could build close ties with humans and influence their judgements. ‘Through its mastery of language, AI could even form intimate relationships with people and use the power of intimacy to change our opinions and worldviews,’ he writes. He cited the case of Blake Lemoine, a Google employee who was fired after publicly stating that the AI chatbot LaMDA had become sentient. The historian notes that the contentious claim cost Lemoine his job. If AI can persuade people to jeopardise their jobs, what else can it persuade them to do?

Prof. Gary Marcus, an AI specialist, pointed out that tools like chatbots might subtly affect people’s beliefs far more than social media does. Companies that select which data goes into their large language models (LLMs) have the potential to alter civilizations in subtle and significant ways. According to a Pew Research Center survey, 62% of Americans believe AI will have a significant impact on jobs over the next 20 years. Their main fear is the use of artificial intelligence in hiring and firing processes, which 77% of respondents oppose.

‘AI is not intelligence, and the idea that AI will replace human intelligence is unlikely’

“Human intelligence is transferable, but machine intelligence is not”

AI is not intelligence; it is prediction, according to the World Economic Forum. “We’ve noticed an increase in the machine’s capacity to accurately forecast and execute a desired outcome with large language models. However, equating this with human intelligence would be a mistake. This is evident when looking at machine learning systems, which, for the most part, can still only accomplish one task very well at a time. This is not common sense, and it is not equal to human levels of thinking that allow for easy multitasking. Humans can absorb information from one source and apply it in a variety of ways. In other words, human intelligence is transferable, but machine intelligence is not,” they say.

According to the World Economic Forum, “AI has enormous potential to do good in a variety of sectors, including education, healthcare, and climate change mitigation.” FireAId, for example, is an AI-powered computer system that predicts the possibility of forest fires based on seasonal variables using wildfire risk maps. It also assesses wildfire danger and intensity to aid in resource allocation. AI is applied in healthcare to improve patient care through more personalised and effective prevention, diagnosis, and treatment. Healthcare expenditures are being reduced as a result of increased efficiencies. Furthermore, AI is poised to substantially alter, and presumably improve, elder care.

The World Economic Forum goes on to say that “exaggerations about AI’s potential largely stem from misunderstandings about what AI can actually do. Many AI-powered machines continue to hallucinate, which means they make a lot of mistakes. As a result, it is unlikely that this sort of AI will replace human intelligence. Another barrier to AI adoption is that AI systems obtain their data from unrepresentative sources. Because the vast bulk of data is generated by a subset of the population in North America and Europe, AI systems tend to mirror that mindset. ChatGPT, for example, mostly uses the written word from those regions. Meanwhile, approximately 3 billion individuals still do not have regular internet access and have not contributed any data.”

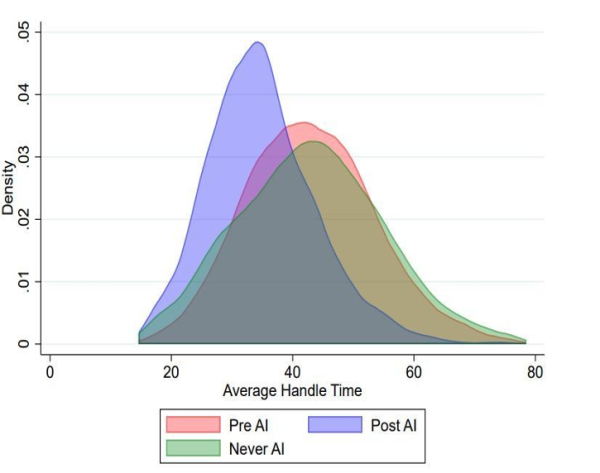

Some experts also contend that AI technology is still in its infancy and cannot yet constitute an existential danger. With today’s AI systems, these experts are more concerned about short-term issues, such as biased and inaccurate responses, than about long-term risks. Researchers at the Massachusetts Institute of Technology (MIT) and Stanford University have been investigating how generative AI affects worker productivity. Their study, which evaluated the performance of over 5,000 customer care employees, found that workers were 14% more productive when using generative AI tools. According to the study, pairing workers with an AI assistant was far more beneficial for novice and low-skilled workers. The effect of the technology on highly skilled workers was negligible.

Pic: Customer service agents working with AI were able to handle 14% more issues per hour (Image: Stanford University/MIT/NBER)

In his article ‘Why we can’t “pause” AI’, Vikram Mehta writes: “As I said, I am not clear where to pitch my flag. But I sense the real problem is not the unbridled momentum of AI. It is the international community’s inability to look beyond narrow jingoistic interests towards a collaborative effort to address the problems of the global commons. “Pause” on AI will not solve this underlying problem. On the contrary, it may exacerbate it by diminishing technologies’ talismanic power”.

If regulated, then what are the risks associated with regulating AI?

“AI systems that produce biased results have been making headlines”

Most of the time, the difficulty in regulating AI arises from the data used to train it. If the data is distorted, the AI will learn that distortion and may even amplify the bias. According to the Harvard Business Review, “AI systems that produce biased results have been making headlines.” Apple’s credit card algorithm, for example, has been accused of discriminating against women, prompting an investigation by New York’s Department of Financial Services. The problem appears in many other forms, such as pervasive online advertising algorithms that may target viewers based on ethnicity, religion, or gender.

According to a recent study published in Science, risk prediction systems used in health care, which affect millions of people in the United States each year, exhibit significant racial bias. Another study, published in the Journal of General Internal Medicine, found that the software used by top hospitals to prioritise kidney transplant recipients was biased against Black patients. In theory, it may be possible to programme some notion of fairness into the software, mandating that all outcomes meet specific criteria. Amazon, for example, is experimenting with a fairness statistic known as conditional demographic disparity, and other companies are working on similar criteria.

However, there is no universally accepted definition of fairness, and it is impossible to be categorical about the broad circumstances that determine equitable outcomes. Furthermore, the parties involved in any given circumstance may have quite different ideas on what constitutes fairness. As a result, any attempts to incorporate it into the software will be difficult.

AI regulation: How much is too much?

“AI technology is still developing, it is challenging to provide a clear legal definition”

Proposals to regulate AI have been put forth in both hard-law and soft-law forms. Legal experts point out that strict legal measures for regulating AI pose significant difficulties. Because AI technology evolves rapidly, it creates a ‘pacing problem’: conventional laws and regulations struggle to keep up with new applications and the risks and benefits they bring. The diversity of AI applications also challenges regulatory agencies, whose jurisdictional scope is often limited. According to legal scholars, soft-law approaches are gaining traction as an alternative because they can be more easily tailored to rapidly evolving AI technology and its emerging applications. Soft-law approaches, however, are often criticised for their limited enforcement potential.

A statement organised by the Future of Life Institute and reportedly signed by hundreds of scientists, technocrats, businessmen, academics, and others calls for a six-month pause on the creation of neural language models. Elon Musk, who paradoxically was a co-founder of OpenAI, is among the signatories, as are Apple co-founder Steve Wozniak and Israeli philosopher and author Yuval Noah Harari. The letter’s fundamental point is that continuing unrestricted development of such language models could result in ‘human-competitive intelligence’ that, if not subject to governance protocols, could represent a ‘profound risk’ to humanity. Work should be put on hold until such protocols are in place.

Regulation of AI is difficult, according to Cason Schmit, Assistant Professor of Public Health at Texas A&M University. To manage AI properly, one must first define it and understand its projected benefits and risks. A legal definition of AI is crucial for determining what the law covers. But because AI technology is still developing, it is challenging to provide a clear legal definition. ‘Soft laws’ are an alternative to the more conventional ‘hard law’ approach of legislating to prevent particular violations. In the soft-law approach, a private organisation establishes guidelines or standards for participants in an industry. These can change more quickly than traditional laws. Soft laws are therefore advantageous for emerging technology since they can easily adjust to new applications and dangers. Soft laws, however, may also result in lax enforcement.

According to the Cyberlaw Clinic at Harvard Law School, however clever, novel, and contemporary AI technology may be, government regulation of emerging technologies is nothing new. Throughout history, governments have regulated emerging technologies with varying degrees of success. Automobile regulation, railway technology regulation, and telegraph and telephone regulation are a few examples. AI systems, like these other technologies, are employed by humans as tools. The societal impact of AI systems is largely determined not by the complicated code that underpins them, but by who uses them, for what goals, and on whom they are used. And all of these things are controllable. The successful regulation of new technology in the past suggests that we should concentrate on its impacts and applications.

According to James Broughel, author of Regulation and Economic Growth: Applying Economic Theory to Public Policy, regulation should be based on evidence of harm rather than the mere prospect of harm. We don’t have much hard proof that unaligned AI poses a serious risk to humanity, other than speculation about how robots will take over the globe or computers will transform the earth into a gigantic paperclip. There may be few, if any, benefits to regulation if there is little or no evidence of a problem. Another reason to be wary of regulation is the cost. AI is a new technology that is still in its infancy. Because we still don’t fully understand how AI works, attempts to regulate it might quickly backfire, restricting innovation and impeding development in this fast-evolving sector. Any laws that are enacted are likely to be tailored to existing practices and players. That makes no sense when it is unclear which AI technologies will be the most successful or which AI players will dominate the business.

India’s Response to demands for AI Regulation

“India will do ‘what is right’ to protect its digital nagriks and keep the internet safe and trusted for its users in the upcoming Digital India framework”

Rajeev Chandrasekhar, Minister of State, Ministry of Electronics and Information Technology, stated after assuming the Chair of the Global Partnership on Artificial Intelligence (GPAI), an international initiative to support responsible and human-centric development and use of artificial intelligence (AI), “We will work in close cooperation with member states to put in place a framework around which the power of AI can be exploited for the good of citizens and consumers.”

Emphasising that AI is a dynamic facilitator for moving forward existing investments in technology and innovation, the Minister stated that India is constructing an ecosystem of modern cyber laws and regulations driven by three boundary conditions: openness, safety and trust, and accountability. With a National AI Programme and a National Data Governance Framework Policy in place, and one of the world’s largest publicly accessible data sets in the works, the Minister reaffirmed India’s commitment to the efficient use of AI for catalysing an innovation ecosystem around AI capable of producing good, trusted applications for our citizens and the world at large.

The Minister of State for Information Technology and Electronics, who is overseeing a mammoth operation involving extensive engagement with stakeholders to frame the draft Digital India Act, which will replace the two-decade-old IT Act, stated that India has its own ideas on “guardrails” that are required in the digital world. Union Minister Rajeev Chandrasekhar stated that India will do “what is right” to protect its digital nagriks and keep the internet safe and trusted for its users in the upcoming Digital India framework, which will include a chapter devoted to emerging technologies, particularly artificial intelligence, and how to regulate them through the “prism of user harm”.

For any queries and feedback, contact priya.shukla@indianexpress.com
